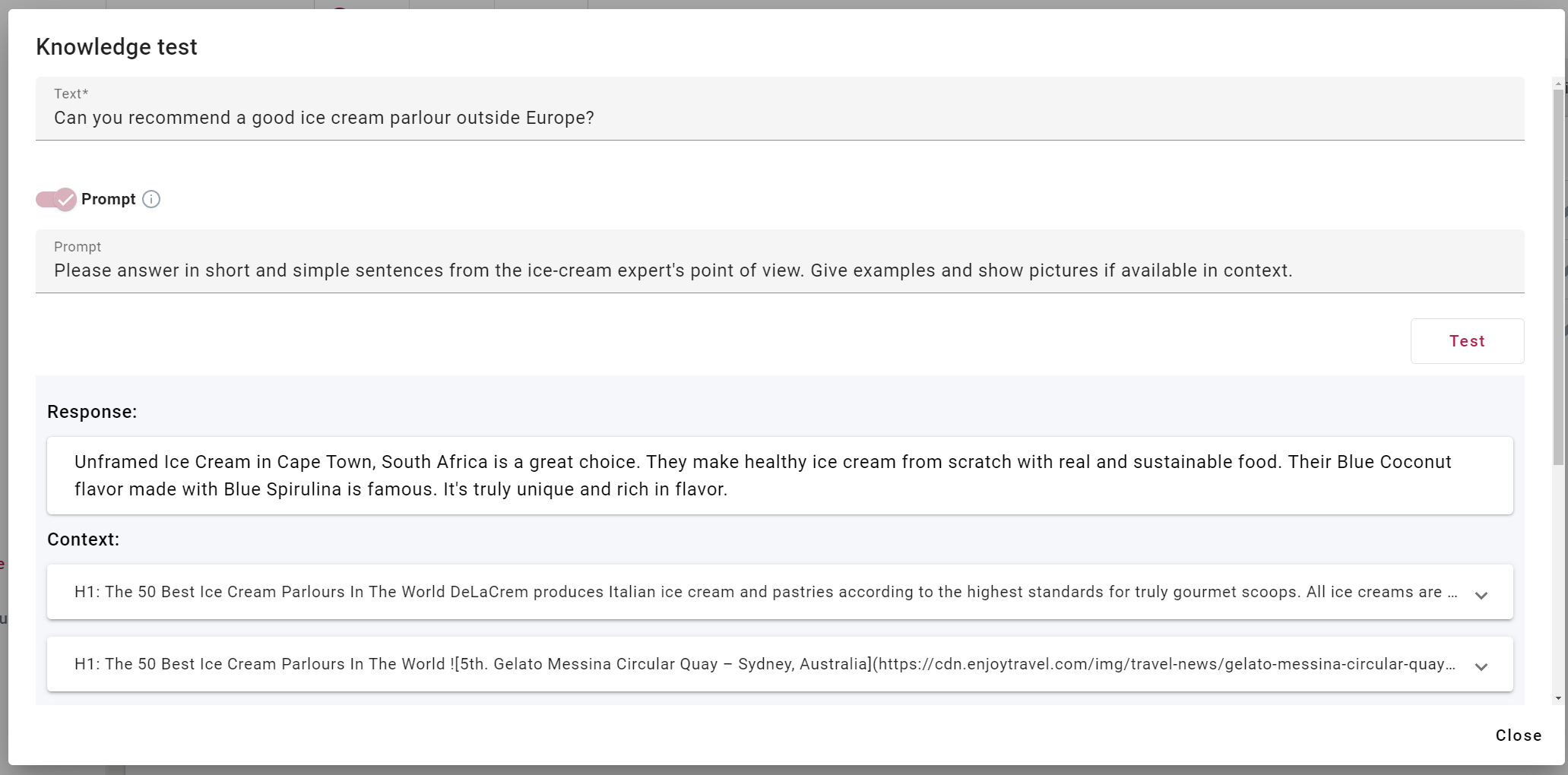

Knowledge test, prompt

In the Knowledge base training view, under Other, you can check how the text generation model answers a specific question.

- Click Knowledge test.

- In the text field, type the question you want the text generation model to answer.

- In the Prompt field, enter a prompt if needed. This step is optional and is only needed if you plan to add a custom prompt to your virtual assistant.

- Click Test.

- The generated answer appears next to Response.

- The Context section shows the data used by the text generation model to create the response, including similarity scores, headings, file locations, URLs, and file names.

Prompt

A prompt is a set of additional instructions, written in descriptive natural language, that guides the automatic text generation model toward the desired output.

The text generation model within the virtual assistant uses its own default system prompt, so adding a custom prompt to the scenario is optional. However, you can create and add your own prompt if a virtual assistant scenario requires it, for example, when the virtual assistant's responses must follow specific instructions on language style or sentence structure.

Creating an effective prompt takes considerable effort and experimentation. We recommend writing a detailed prompt that specifies the contextual conditions the text generation model should take into account when generating a response. Before adding a prompt to a scenario, you can evaluate its impact in the knowledge test by entering the prompt text in the Prompt field.

If you choose to use your custom prompt with a virtual assistant, go to the Outputs view and enter it in the Value field of the output with the identifier system_text_prompt.

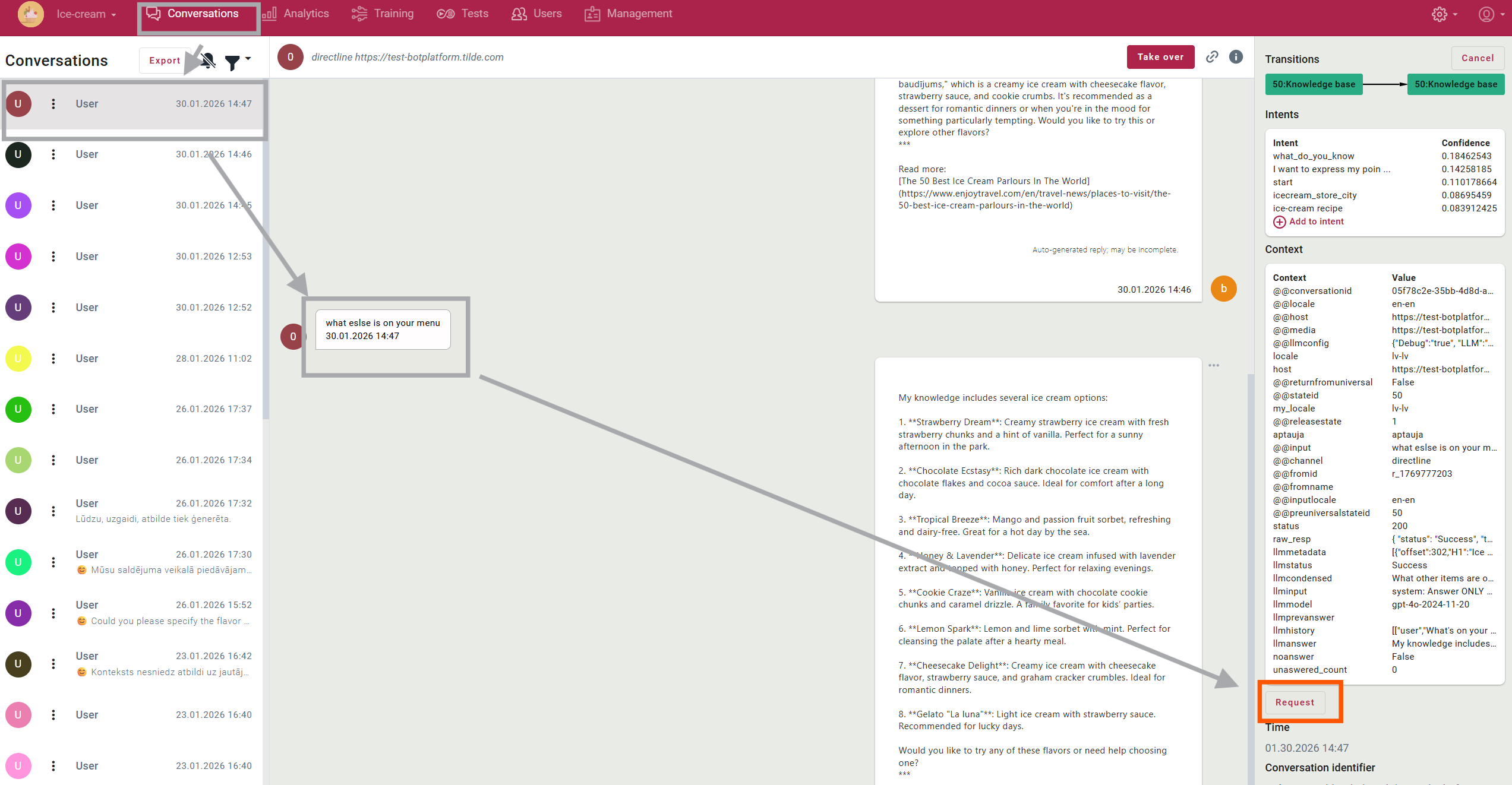

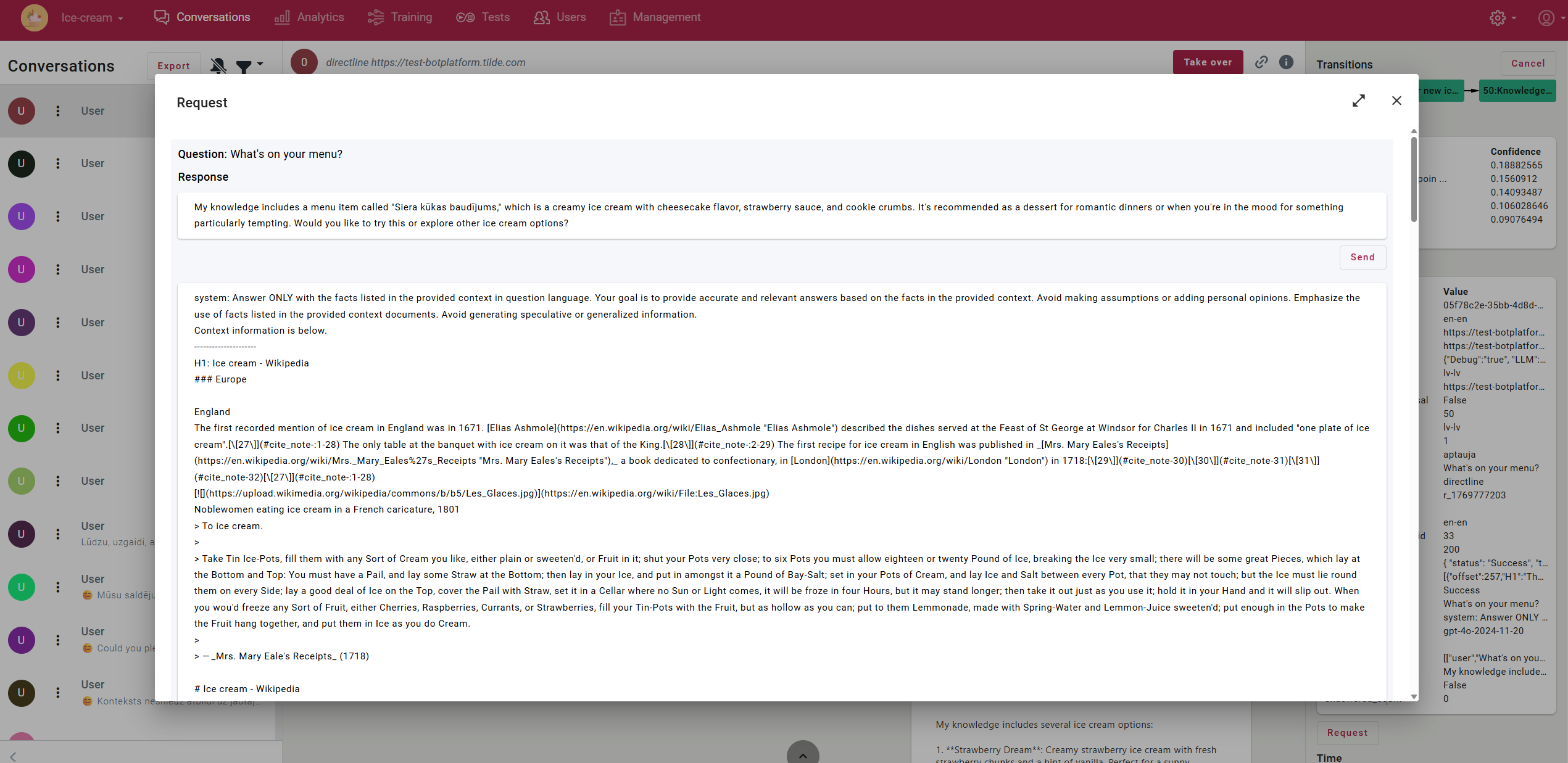

Request

In the Conversations view, you can open any conversation handled by the virtual assistant and click a user input to view the request that was sent to the automatic text generation model.

The request contains the prompt, the selected segments, and the conversation history. Click Send to send the request to the large language model; the generated response is displayed in the Response field. You can use this window to experiment with automatic text generation by editing the request data and trying out different requests.
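It can help to picture the request as plain text assembled from its three parts. The following is a minimal Python sketch under stated assumptions: the function name `build_request`, the `Context:` label, and the ordering and blank-line separators are hypothetical illustrations, not the platform's documented request layout. Only the closing `user:`/`assistant:` lines follow the format this page describes for the user's question.

```python
def build_request(
    prompt: str,
    segments: list[str],
    history: list[tuple[str, str]],
    question: str,
) -> str:
    """Assemble a request text (hypothetical layout, for illustration only)."""
    parts = [prompt.strip()]
    # Knowledge base segments selected for this question, if any (assumed label).
    if segments:
        parts.append("Context:\n" + "\n\n".join(s.strip() for s in segments))
    # Earlier turns of the conversation, oldest first, as "role: text".
    for role, text in history:
        parts.append(f"{role}: {text}")
    # Current question plus an empty assistant turn for the model to complete.
    parts.append(f"user: {question}\nassistant:")
    return "\n\n".join(parts)


request = build_request(
    prompt="Answer briefly and politely.",
    segments=["Opening hours: Mon-Fri 9:00-17:00."],
    history=[("user", "Hi"), ("assistant", "Hello! How can I help?")],
    question="When are you open?",
)
print(request)
```

Ending the text with an empty `assistant:` line leaves the next turn open, so the model's output is read as the assistant's answer to the last `user:` line.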

If, while editing the request data, a notification states that the request text must include the user’s question, make sure the question is included in the following format:

user: here is the user’s question

assistant:
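If you prepare request text outside the window before pasting it in, you might check the required format locally first. This is a minimal Python sketch that assumes the rule is only what this page states: a non-empty `user:` line followed by an empty `assistant:` line, optionally separated by blank lines. The function name `has_user_question` is hypothetical, and the platform's own validation may be stricter or work differently.

```python
import re

# A non-empty "user:" line, optional blank lines, then an "assistant:" line
# with nothing after it (the turn the model is expected to complete).
_QUESTION_FORMAT = re.compile(
    r"^user:[ \t]*\S[^\n]*\n(?:[ \t]*\n)*assistant:[ \t]*$",
    re.MULTILINE,
)


def has_user_question(request_text: str) -> bool:
    """Return True if the text contains a user question in the expected format."""
    return _QUESTION_FORMAT.search(request_text) is not None


print(has_user_question("user: When are you open?\n\nassistant:"))
print(has_user_question("When are you open?"))
```

Earlier turns such as `assistant: Hello!` do not satisfy the pattern on their own, because the `assistant:` line must be empty; the check therefore looks for the open turn that awaits the model's answer.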
